The Autonomous Agent Era

We've talked about how AI is transforming coding today. Now let's look at where this is headed—and why understanding the trajectory matters even if you're focused on learning current tools.

The evolution is clear: code completion → function generation → feature implementation → autonomous agents. We're transitioning from tools that assist developers to agents that can complete complex, multi-step tasks with minimal human supervision.

This isn't science fiction. It's happening now, and the timeline is shorter than most people realize.

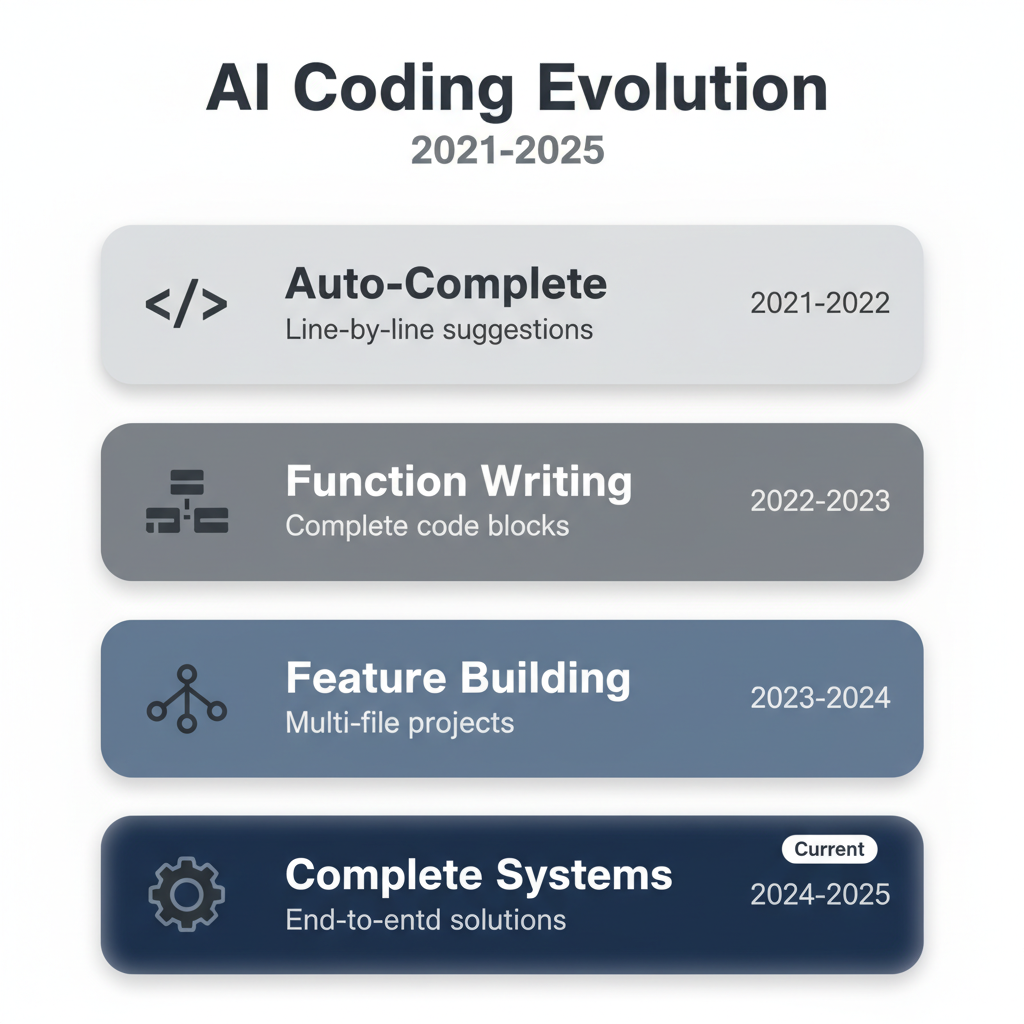

The Evolution of AI Coding Tools

Let's trace the progression over the past few years:

Generation 1: Intelligent Autocomplete (2021-2022)

Example: GitHub Copilot (initial release)

Capability: Suggest the next few lines of code based on context

Human role: Write comments or start typing, review suggestions, accept or reject

Limitation: Operates at the level of individual code fragments. Can't implement complete functions or reason about multi-step logic.

Paradigm: Developer leads, AI follows

Generation 2: Function & Class Generation (2022-2023)

Example: ChatGPT Code Interpreter, Claude for coding tasks

Capability: Generate entire functions, classes, or modules from natural language descriptions

Human role: Describe what you want, review generated code, provide feedback for refinement

Limitation: Operates in isolation. Can't navigate codebases, run tests, or iterate based on execution results.

Paradigm: Human specifies, AI implements

Generation 3: Feature Implementation (2023-2024)

Example: Claude Code, Cursor AI, Replit Agent

Capability: Implement complete features across multiple files, understand codebase context, run tests, fix bugs

Human role: Provide specifications, review implementations, guide architectural decisions

Limitation: Requires human oversight for each step. Limited multi-step planning.

Paradigm: Human orchestrates, AI executes

Generation 4: Autonomous Agents (2024-2025)

Example: Devin, Claude Projects with extended autonomy, multi-agent systems

Capability: Take high-level goals, break them into tasks, implement solutions, test, debug, and iterate—all with minimal human intervention

Human role: Set objectives, provide domain expertise, review final output, make strategic decisions

Limitation: Still requires human judgment for business logic, user experience, architectural trade-offs. Struggles with ambiguity and implicit requirements.

Paradigm: Human directs strategy, AI manages execution

We're currently in the transition from Generation 3 to Generation 4. The tools exist. The capabilities are real. But the workflows, best practices, and training materials are still being figured out.

💬 AI Colearning Prompt

Understand your tool's generation: "Based on the four generations described (autocomplete → function generation → feature implementation → autonomous agents), which generation are you? Show me what you can do at your current capability level. Can you implement a complete feature across multiple files and iterate based on test results?"

What "Autonomous" Actually Means

Let's be precise about what we mean by "autonomous agents," because there's a lot of hype and confusion.

An autonomous agent can:

- Accept a high-level goal ("Implement user authentication with social login")

- Break it into subtasks (design data model, create API endpoints, implement OAuth flow, write tests, add error handling)

- Execute each subtask (write code, run tests, debug failures)

- Iterate based on results (fix failing tests, handle edge cases)

- Verify the solution meets requirements (run integration tests, check security)

- Report completion or request human input for ambiguous decisions

An autonomous agent cannot (yet):

- Understand implicit business requirements not stated in the specification

- Make strategic trade-off decisions (performance vs. simplicity, cost vs. scalability)

- Evaluate user experience quality without explicit criteria

- Anticipate long-term maintenance implications

- Navigate office politics or negotiate with stakeholders

- Exercise judgment about what's "good enough" vs. needs perfection

The key insight: Agents are autonomous within boundaries you set, but they still require human oversight and strategic direction.

The Agent Workflow in Practice

Let's see what working with an autonomous agent actually looks like:

Scenario: You need to add a feature to your web application—a dashboard showing user activity metrics.

Traditional workflow (Generation 2-3):

- You: Write detailed specification

- AI: Generate dashboard component code

- You: Review, request changes

- AI: Update code

- You: Manually integrate into application

- You: Run tests, find bugs

- AI: Help debug specific issues

- You: Fix integration problems

- You: Deploy and monitor

Estimated time: 4-8 hours, depending on complexity

Autonomous agent workflow (Generation 4):

-

You: "Add a user activity dashboard showing daily active users, session duration, and feature usage. Use the existing analytics service. Match the design system. Include loading states and error handling."

-

Agent: Analyzes codebase structure, identifies analytics service, understands design system

-

Agent: Creates dashboard component, integrates with analytics API, adds proper loading/error states, writes unit and integration tests

-

Agent: Runs tests, identifies failing test (timezone handling issue), fixes bug, re-runs tests

-

Agent: Creates pull request with implementation, tests, and documentation

-

You: Review PR, test in staging, approve

-

Agent or You: Merge and deploy

Estimated time: 30 minutes of your active attention, with agent working autonomously for implementation

The time savings aren't just about speed—they're about cognitive load. You're not context-switching between specification, implementation, testing, and debugging. You stay at the strategic level.

Multi-Agent Systems: The Next Frontier

The most interesting developments aren't single agents—they're teams of specialized agents working together:

Architecture:

- Planning Agent: Breaks down goals into tasks

- Implementation Agent: Writes code

- Testing Agent: Generates tests and validates correctness

- Review Agent: Checks code quality, security, performance

- Documentation Agent: Creates technical documentation

- Orchestrator: Coordinates the team and manages workflow

Why this matters:

Just like human teams benefit from specialization, agent teams can be more effective than single generalist agents:

- Testing Agent can be more thorough because it's not biased by having written the code

- Review Agent can check security without time pressure to "just make it work"

- Documentation Agent focuses on clarity without implementation concerns

The challenge is coordination: ensuring agents work together smoothly and don't produce conflicting outputs.

What Won't Change: The Need for Human Judgment

Here's the part that's easy to miss in all the hype:

Even in the full autonomous agent era, human developers remain essential.

Why? Because software development isn't just coding—it's:

- Understanding user needs (often unstated or poorly articulated)

- Making trade-off decisions (fast vs. scalable, simple vs. flexible)

- Balancing constraints (time, cost, technical debt, team capacity)

- Ensuring quality (not just "works" but "works well" for real users)

- Navigating ambiguity (when requirements conflict or are unclear)

- Exercising creativity (finding elegant solutions to novel problems)

Agents can help with all of these—but they can't replace human judgment, domain expertise, and strategic thinking.

Consider an analogy: Autonomous vehicles. We have self-driving cars today that handle most driving tasks. But we still need human drivers for:

- Navigating unusual situations (construction zones, emergency vehicles)

- Making ethical decisions (trolley problem scenarios)

- Understanding context (driving through a neighborhood where kids play)

- Taking responsibility (legal and moral accountability)

The same applies to autonomous coding agents. They'll handle implementation, but humans provide direction, judgment, and accountability.

🎓 Expert Insight

Autonomy doesn't mean unsupervised. It means self-executing within boundaries you define. Like cruise control: it maintains speed autonomously, but you still steer and choose the destination. The value is in defining good boundaries.

Preparing for the Agent Era

The good news: You don't need to wait for the future to arrive. The skills that will matter in the autonomous agent era are the same skills that matter today:

- Specification writing: Agents are only as good as the goals you give them

- Architecture thinking: Agents implement your designs, not create them

- Code review: You validate agent output for correctness and quality

- System understanding: You orchestrate how components work together

- Domain expertise: You provide business and user context agents lack

This book teaches these skills intentionally. You're not learning "how to code in 2024"—you're learning how to thrive in an AI-augmented development landscape that will continue evolving for the next decade.

The Opportunity in Transformation

Here's the final insight for this section:

Every major transition creates opportunity for those who adapt early.

When mobile apps became mainstream, developers who learned iOS and Android early had a massive advantage. When cloud computing emerged, engineers who understood distributed systems and infrastructure-as-code led the transformation.

The autonomous agent era is creating a similar opportunity window—and it's open right now.

🤝 Practice Exercise

Ask your AI: "Which generation of AI tool are you? Can you autonomously break down a goal like 'build user authentication,' execute multiple steps, run tests, and iterate until it works? Or do you need me to guide each step?"

Goal: Understand your current tool's capabilities realistically.

In the next section, we'll explore why this is arguably the best time in decades to be learning software development, regardless of whether you're a beginner or making a career transition.

These videos provide additional context on the AI coding revolution and where the industry is headed.

Try With AI

Use your AI companion tool set up (e.g., ChatGPT web, Claude Code, Gemini CLI), you may use that instead—the prompts are the same.

Prompt 1: Understand Current Tool Capabilities

This lesson describes four 'generations' of AI coding tools, from autocomplete to autonomous agents. Help me understand where we are TODAY (2025) and what I should expect when I start using AI tools. What generation will I be working with? What can it actually do?

Expected outcome: Realistic understanding of current AI tool capabilities (not science fiction).

Prompt 2: Set Autonomous Agent Expectations

The lesson defines 'autonomous' agents—what they CAN and CAN'T do. This is important: help me set realistic expectations. If I give an AI agent a task like 'build me a simple portfolio website,' what parts will it handle autonomously? Where will it need my help?

Expected outcome: Clear expectations about what autonomous agents will and won't do for you.

Prompt 3: Assess Learning Timing

I'm curious about the timeline: when will autonomous agents be 'mainstream'? The lesson predicts 2025-2027. But what does that mean for me learning NOW? Should I wait for the tools to mature, or start learning with today's Generation 3 tools? Give me your honest recommendation.

Expected outcome: Timing guidance: whether to start now or wait for better tools.

Prompt 4: Identify Human Judgment Areas

The lesson emphasizes 'human judgment remains essential.' Give me 3-5 concrete examples of decisions or judgment calls AI CAN'T make (that I'll need to handle). This will help me understand where I add value even in the 'autonomous agent era.'

Expected outcome: Concrete examples of where human judgment beats AI (your unique value).