The Development Lifecycle in Transition

Lesson Video:

Most discussions about AI coding focus on one thing: writing code. GitHub Copilot autocompletes your functions. Claude Code implements features from specifications. ChatGPT debugs your errors.

But code generation is just one piece of a much larger transformation. Every phase of the software development lifecycle—from initial planning through production operations—is being reshaped by AI tools.

Understanding this systemic change helps you see why this isn't just about "coding faster." It's about fundamentally rethinking how software gets built.

A Fundamental Realization

As AI tools transform every phase of development, a profound insight emerges:

Code is becoming a regenerable artifact, not the primary asset.

When specifications are clear and AI agents handle implementation, you can regenerate code on demand. Change a requirement? Regenerate. Migrate frameworks? Regenerate. Refactor for performance? Regenerate.

What becomes durable?

- Your specifications (clear articulation of what the system should do)

- Your architectural decisions (how components interact and scale)

- Your domain expertise (understanding of the problem space)

- Your patterns and practices (reusable intelligence that compounds)

What becomes temporary?

- The specific code implementation (can be regenerated from specs)

- Framework-specific syntax (can be migrated with AI assistance)

- Boilerplate and repetitive patterns (automated through intelligent generation)

This is the shift from "reusable code" to "reusable intelligence." We'll explore what this means for your development practice throughout this book—starting with how specifications become your primary development artifact (Chapter 17).

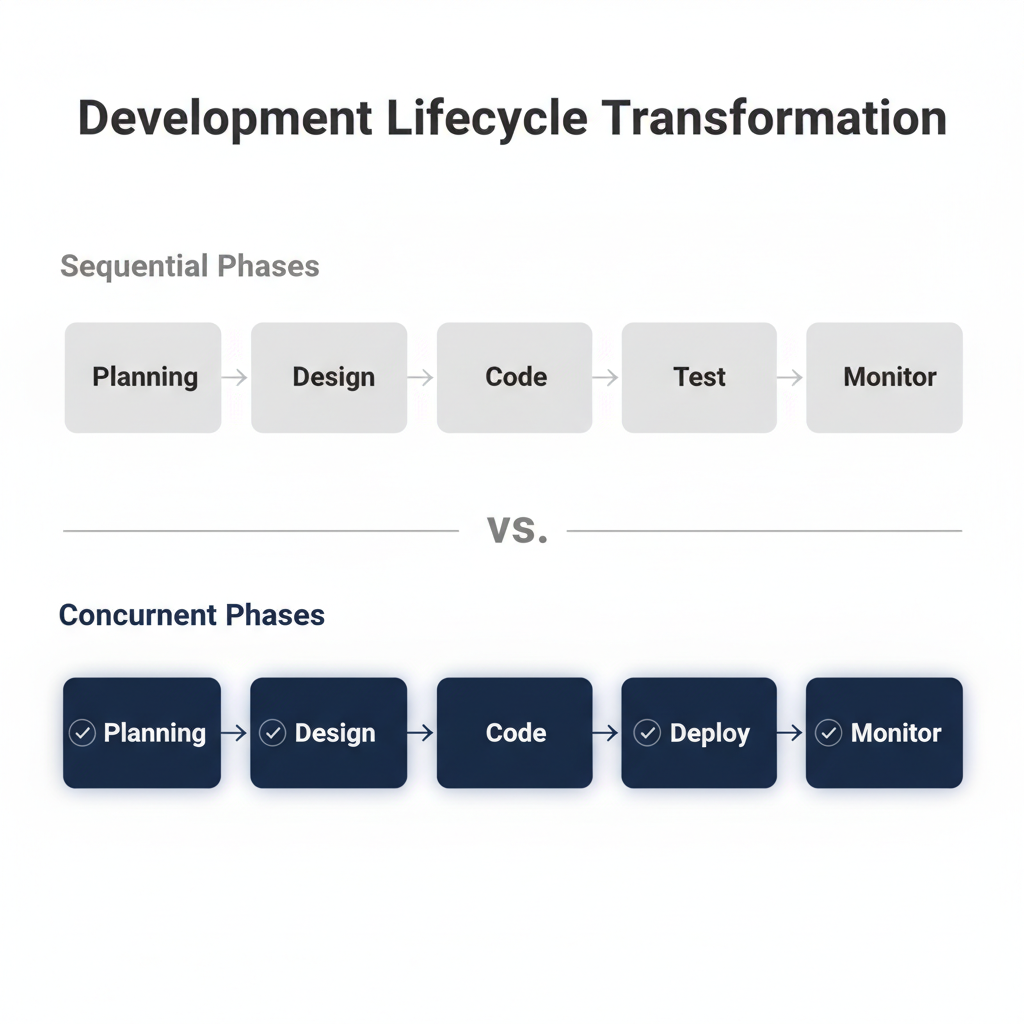

The Traditional Development Lifecycle

Let's establish a baseline. The traditional software development lifecycle looks something like this:

Planning → Design → Implementation → Testing → Deployment → Operations

Or if you prefer agile terminology:

Discovery → Architecture → Development → QA → Release → Maintenance

The names vary by methodology (Waterfall, Agile, DevOps), but the fundamental phases remain consistent. You figure out what to build, design how to build it, write the code, verify it works, ship it to users, and keep it running.

Each phase traditionally required different skills, different tools, and different specialists:

- Product managers handled planning

- Architects designed systems

- Developers wrote code

- QA engineers tested functionality

- DevOps engineers managed deployment

- SREs handled operations

AI is transforming all of these phases simultaneously. Let's walk through each one.

Phase 1: Planning & Requirements

Traditional approach:

- Product managers write user stories and acceptance criteria

- Business analysts translate requirements into technical specs

- Stakeholders review documents in endless meetings

- Ambiguities and edge cases emerge during implementation (too late)

AI-augmented approach:

- Natural language processing helps extract requirements from conversations, emails, and documents

- AI agents suggest edge cases and failure scenarios humans might miss

- Automated analysis identifies inconsistencies and ambiguities before development starts

- AI-generated prototypes help stakeholders visualize requirements before committing to implementation

Real example: Organizations using AI-assisted requirements analysis report finding more edge cases during planning, helping prevent bugs from reaching production and reducing rework cycles. A Thoughtworks case study found approximately 10% fewer bugs during testing due to better edge case coverage in AI-enhanced story definitions.

What this means for you: If you're learning AI-driven development, you need to understand how to work with AI planning tools to surface requirements, not just write them manually. You need to learn prompt engineering for requirements elicitation, not just documentation formatting.

🎓 Expert Insight

Notice the shift: traditional planning focused on documenting requirements. AI-era planning focuses on asking better questions—letting AI help you discover edge cases and ambiguities before implementation. This is co-learning in action: AI as collaborator, not just transcriber.

Phase 2: Architecture & Design

Traditional approach:

- Senior engineers sketch system designs in meetings or whiteboards

- Architecture documents get written (and often go out of date)

- Trade-off analysis happens based on individual experience

- Decisions get made, sometimes without considering alternatives

AI-augmented approach:

- AI systems suggest architectural patterns based on requirements

- Multiple design alternatives generated automatically for comparison

- Trade-off analysis includes performance projections, cost estimates, scalability implications

- Architecture documentation stays synchronized with code (AI can update docs when implementation changes)

Real example: Anthropic engineers use Claude to explore architectural alternatives when building features. The AI generates 3-5 different approaches, explains trade-offs, and highlights considerations the team might have missed. This doesn't replace human judgment—it augments it with broader exploration of the design space.

What this means for you: Architectural skills become more important, not less. AI can generate alternatives, but you need the judgment to evaluate them. You need to understand distributed systems, security principles, scalability patterns—not just syntax.

Phase 3: Implementation (Code Writing)

Traditional approach:

- Developer reads specification

- Developer writes code line by line

- Developer manually implements boilerplate, error handling, logging

- Developer searches Stack Overflow when stuck

- Developer debugs compilation errors and runtime bugs

AI-augmented approach:

- AI generates initial implementation from specification

- Developer reviews, refines, and iterates with AI assistance

- AI handles boilerplate, standard patterns, error handling automatically

- AI suggests solutions based on codebase context, not generic Stack Overflow answers

- AI catches bugs during writing, before compilation

Productivity impact:

Enterprise organizations using AI tools report productivity gains ranging from modest to substantial. Google's 2024 DORA research (surveying 39,000+ professionals) found that over a third of developers experienced moderate to extreme productivity increases, though the overall impact varies by context and implementation approach. Individual success stories from GitHub, Anthropic, and enterprise deployments demonstrate significant time savings for standard features and debugging tasks.

What this means for you: Your value shifts from "typing speed" to "design quality and code review rigor." You become an orchestrator and editor, not primarily an author.

💬 AI Colearning Prompt

Explore architectural thinking: "I need to build a system that processes 10,000 user uploads per hour. Generate 3 different architectural approaches (serverless, containerized, hybrid) and compare their trade-offs for cost, scalability, and maintenance."

Phase 4: Testing & Quality Assurance

Traditional approach:

- QA engineer writes test plan manually

- Unit tests written by developers (often incomplete)

- Integration tests written by separate QA team

- Manual testing for edge cases and user workflows

- Bugs found late in cycle, expensive to fix

AI-augmented approach:

- AI generates comprehensive test suites from requirements and code

- Automatically identifies edge cases developers didn't think to test

- Generates test data that exercises realistic scenarios

- Predicts likely bug locations based on code patterns and complexity metrics

- Continuous testing with AI-powered exploratory testing

Real example: A fintech company used AI-generated test cases to achieve 95% code coverage (up from 60% with manual testing). More importantly, AI identified 40% more edge cases than human testers specified—preventing production bugs.

What this means for you: Testing becomes a conversation with AI tools about "what could go wrong" rather than manually writing assertions. You need to understand what to test (boundary conditions, failure modes, security vulnerabilities), not just how to write tests.

Phase 5: Deployment & Release

Traditional approach:

- DevOps engineer writes infrastructure-as-code (Terraform, CloudFormation)

- Deployment scripts maintained manually

- Rollout strategies planned based on past experience

- Monitoring configured for expected failure modes

AI-augmented approach:

- AI generates infrastructure configuration from requirements

- Deployment pipelines optimized automatically based on application characteristics

- AI-powered canary analysis detects anomalies during rollout

- Rollback decisions assisted by AI analysis of metrics and logs

Real example: Netflix uses AI-powered deployment systems like Kayenta that analyze hundreds of metrics during canary releases. The system automatically rolls back if anomalies are detected. Similar canary analysis systems at Waze prevent approximately 25% of all incidents, including most user-facing incidents, before they affect customers.

What this means for you: You need to understand infrastructure concepts, monitoring strategies, and failure modes—but AI handles configuration details. Focus on "what good looks like" rather than memorizing command syntax.

Phase 6: Operations & Maintenance

Traditional approach:

- SREs monitor dashboards for anomalies

- On-call engineers respond to alerts manually

- Root cause analysis done through log inspection and correlation

- Performance optimization based on profiling and experimentation

AI-augmented approach:

- AI continuously analyzes telemetry and predicts failures before they occur

- Automated remediation for common issues (restart unhealthy services, scale resources)

- AI-assisted root cause analysis correlates logs, metrics, and traces

- Performance recommendations based on observed patterns and industry best practices

What this means for you: Operations becomes proactive and predictive rather than reactive. You interpret AI insights and make strategic decisions about system health rather than manually triaging incidents.

🎓 Expert Insight

AI doesn't eliminate the lifecycle. It compresses it. What took weeks now takes days, but you still need planning, testing, deployment, and monitoring. The sequence stays. The speed changes. This is why understanding the full cycle matters more than ever.

The Compounding Effect

Here's what makes this transformation particularly powerful:

Each phase improvement compounds with others.

When AI helps you identify edge cases during planning, you write better requirements. Better requirements lead to better architecture. Better architecture makes implementation easier. Easier implementation produces fewer bugs. Fewer bugs mean smoother deployments. Smoother deployments reduce operational burden.

The entire development lifecycle becomes:

- Faster (weeks become days, days become hours)

- Higher quality (more edge cases caught, fewer bugs shipped)

- More predictable (fewer surprises, better estimation)

- More maintainable (better documentation, clearer architecture)

What This Means For Traditional Roles

The transformation across all phases raises an uncomfortable question: What happens to specialized roles?

If AI handles:

- Requirements analysis (PM work)

- Architecture design (senior engineer work)

- Code implementation (developer work)

- Test generation (QA work)

- Infrastructure configuration (DevOps work)

- Incident response (SRE work)

...do we still need all these specialists?

The answer isn't straightforward:

Some specializations are converging. The boundaries between "developer," "QA engineer," and "DevOps engineer" are blurring. AI tools enable individual contributors to handle responsibilities that previously required dedicated specialists.

But high-level expertise becomes more valuable. You still need people who deeply understand distributed systems, security, performance optimization, and user experience. The difference is they spend less time on mechanical implementation and more time on strategic decisions.

And new specializations are emerging. Prompt engineering, AI orchestration, agent supervision—these weren't job categories three years ago. Now they're becoming critical skills.

The key insight: The development lifecycle isn't going away. It's being transformed. Every phase still requires human judgment, creativity, and domain expertise. But the mechanical aspects of each phase—the boilerplate, the repetitive work, the standard patterns—are being automated.

Your job is to figure out which side of that divide your skills fall on—and if they're on the mechanical side, to develop the judgment skills that AI can't automate.

🤝 Practice Exercise

Ask your AI: "Walk me through building a simple blog application, but explain what happens at each lifecycle phase (planning, design, implementation, testing, deployment, operations). Where do I make decisions, and where does AI execute?"

Goal: Understand the full cycle, not just coding.

In the next section, we'll explore this evolution in detail: how the developer role is changing from typist to orchestrator, and what that means for your career.

Try With AI

Use your AI companion tool set up (e.g., ChatGPT web, Claude Code, Gemini CLI), you may use that instead—the prompts are the same.

Prompt 1: Map The Full Development Process

This lesson says AI is transforming 'every phase' of development—not just writing code. Help me understand: If I want to build a simple web app (like a to-do list or small business tool), walk me through each phase and explain where AI can help me. Use simple language, not technical jargon.

Expected outcome: Clear mental model of the full development process (not just "coding").

Prompt 2: Avoid Dependency Traps

I'm concerned about one thing: If AI handles so much of the work (planning, testing, deployment, etc.), will I actually understand what I'm building? Or will I just be clicking buttons without learning? Give me an honest answer and suggest how to avoid this trap.

Expected outcome: Honest guidance on learning WITH AI without becoming dependent on it.

Prompt 3: Deep Dive One Phase

Pick ONE phase from the development lifecycle (maybe testing or deployment—whichever is most helpful for beginners). Explain it in detail: what it means, why it matters, and give me ONE specific way I can use AI to do it better as I'm learning.

Expected outcome: Deep understanding of at least ONE phase where you can apply AI immediately.

Prompt 4: Understand Compounding Effects

The lesson mentions the 'compounding effect'—each phase improvement makes the next phase easier. Explain this with a concrete example. If I do planning better with AI, how exactly does that make my testing easier later?

Expected outcome: Appreciation for how doing early work well pays off later.